The Trust Stack: A System for Digital Credibility

Digital trust isn’t built on brand reputation alone. It’s formed in the moment through experience.

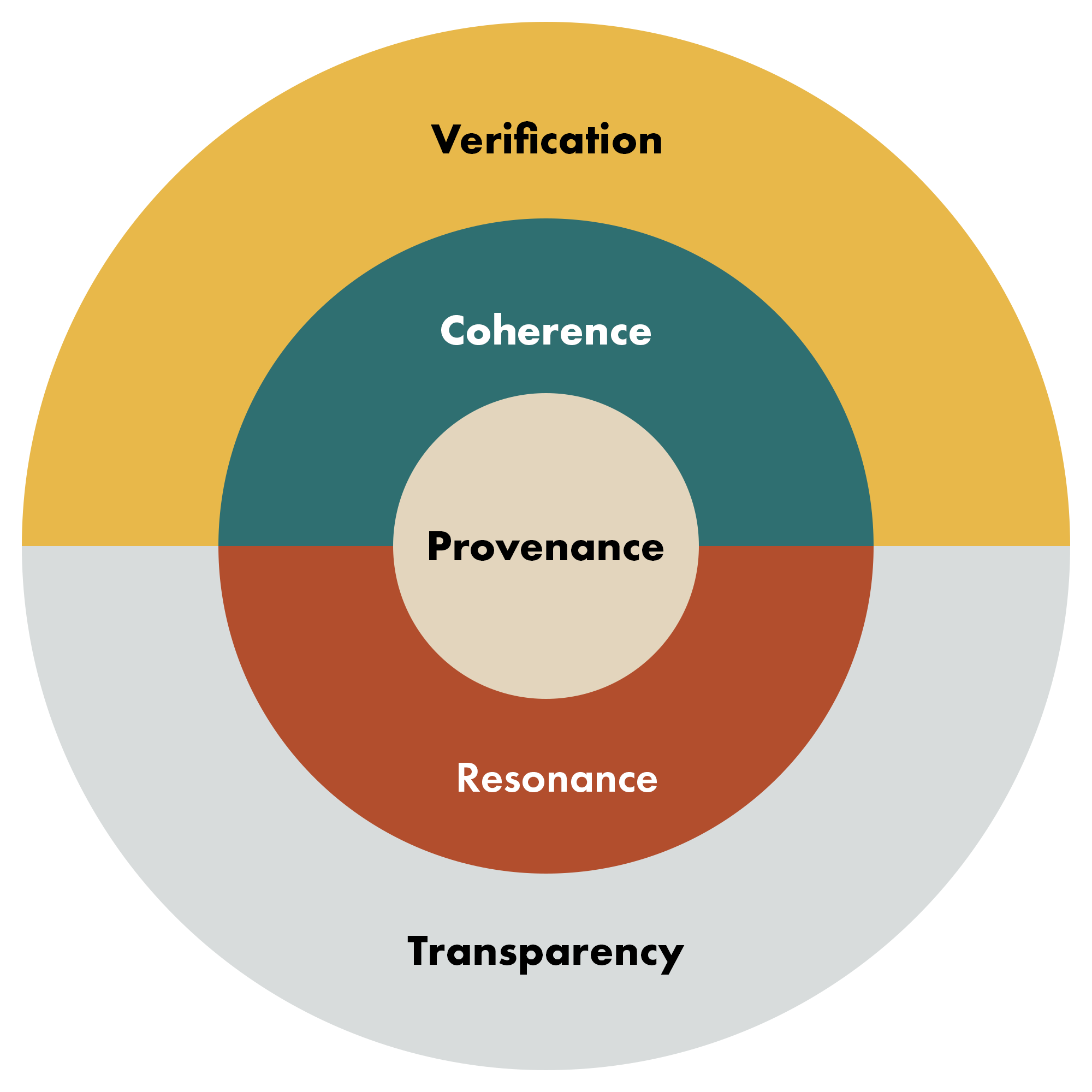

Five Layers of the Trust Stack

Designing for humans. Encoding for machines.

Each layer strengthens what people perceive and what models can parse.

Machine legibility makes the Trust Stack operational.

We help teams structure digital experiences so that credibility signals are clear to both people and machines. The result is confident decisions, accurate interpretation, and real-time trust.

When the Trust Stack is most useful

The Trust Stack is for leaders building digital experiences where trust is no longer automatic and must be carried by the experience itself.

It applies when products are automated, AI-mediated, or abstract, when users hesitate despite strong offerings, or when security and brand equity exist but confidence still breaks down at the moment of interaction.

This most often shows up in AI-driven platforms, regulated industries like finance and health, and established brands navigating new digital and algorithmic environments. The underlying challenge is the same: credibility must be visible, legible, and verifiable in real time.

Ready to apply the Trust Stack to your business? Start with a diagnostic to identify where credibility breaks and what to address first.